https://www.w3schools.com/whatis/whatis_ajax.asp

https://en.wikipedia.org/wiki/Ajax_(programming)

AJAX = Asynchronous JavaScript And XML

In practice, modern implementations commonly utilize JSON instead of XML. Ajax is not a technology, but rather a programming concept.

https://ably.com/blog/websockets-vs-long-polling

An overview of Long Polling

In 1995, Netscape Communications hired Brendan Eich to implement scripting capabilities in Netscape Navigator and, over a ten-day period, the JavaScript language was born. Its capabilities as a language were initially very limited compared to modern-day JavaScript, and its ability to interact with the browser’s document object model (DOM) was even more limited. JavaScript was mostly useful for providing limited enhancements to enrich document consumption capabilities, for example in-browser form validation and lightweight insertion of dynamic HTML into an existing document.

As the browser wars heated up and Microsoft’s Internet Explorer reached version 4 and beyond, the battle for the most robust feature set led to Microsoft’s introduction of what ultimately became XMLHttpRequest, which all browsers have supported for well over a decade.

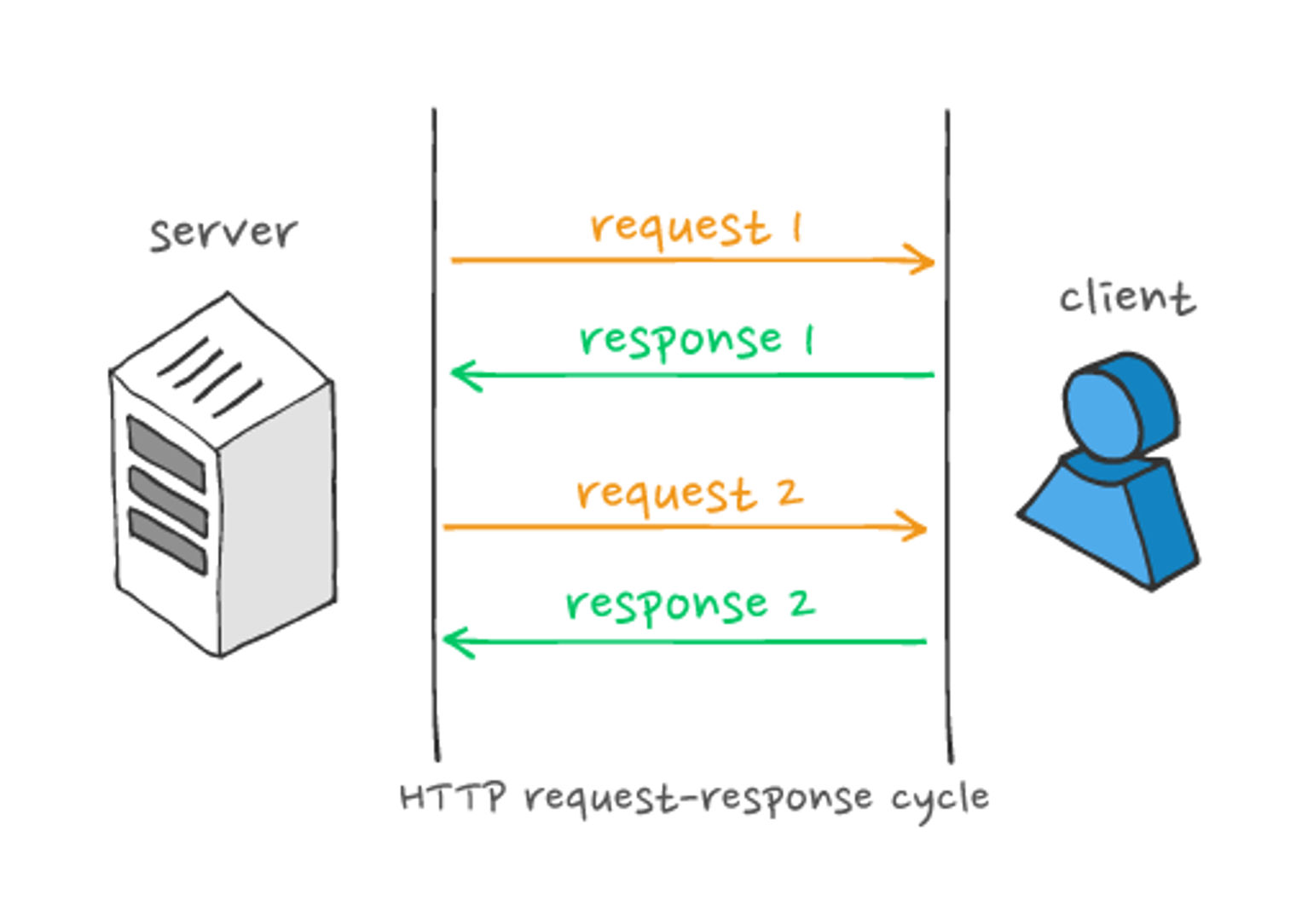

Long polling is essentially a more efficient form of the original polling technique. Making repeated requests to a server wastes resources, as each new incoming connection must be established, the HTTP headers must be parsed, a query for new data must be performed, and a response (usually with no new data to offer) must be generated and delivered. The connection must then be closed and any resources cleaned up. Rather than having to repeat this process multiple times for every client until new data for a given client becomes available, long polling is a technique where the server elects to hold a client’s connection open for as long as possible, delivering a response only after data becomes available or a timeout threshold is reached.
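The server side of this "hold the request until data arrives or a timeout is reached" behaviour can be sketched in a few lines. This is only a minimal illustration and is not taken from the article: `LongPollChannel`, `wait`, `publish`, and the 30-second default are hypothetical names and values chosen for the example.

```javascript
// Hypothetical helper: holds poll requests until data arrives or a timeout fires.
class LongPollChannel {
  constructor(timeoutMs = 30000) {
    this.timeoutMs = timeoutMs; // assumed default, tune for your proxies
    this.waiters = new Set();   // poll requests currently being held open
  }

  // Called once per incoming poll. Resolves with the data, or null on timeout.
  wait() {
    return new Promise((resolve) => {
      const waiter = { resolve, timer: null };
      waiter.timer = setTimeout(() => {
        this.waiters.delete(waiter);
        resolve(null); // nothing new: the client is expected to poll again
      }, this.timeoutMs);
      this.waiters.add(waiter);
    });
  }

  // Called when new data becomes available. Answers every held request
  // and returns how many requests were released.
  publish(data) {
    for (const waiter of this.waiters) {
      clearTimeout(waiter.timer);
      waiter.resolve(data);
    }
    const released = this.waiters.size;
    this.waiters.clear();
    return released;
  }
}
```

In an HTTP handler, each incoming poll would `await channel.wait()` and then write either the data or an empty response (when the result is `null`), after which the client issues its next poll.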

An overview of WebSockets

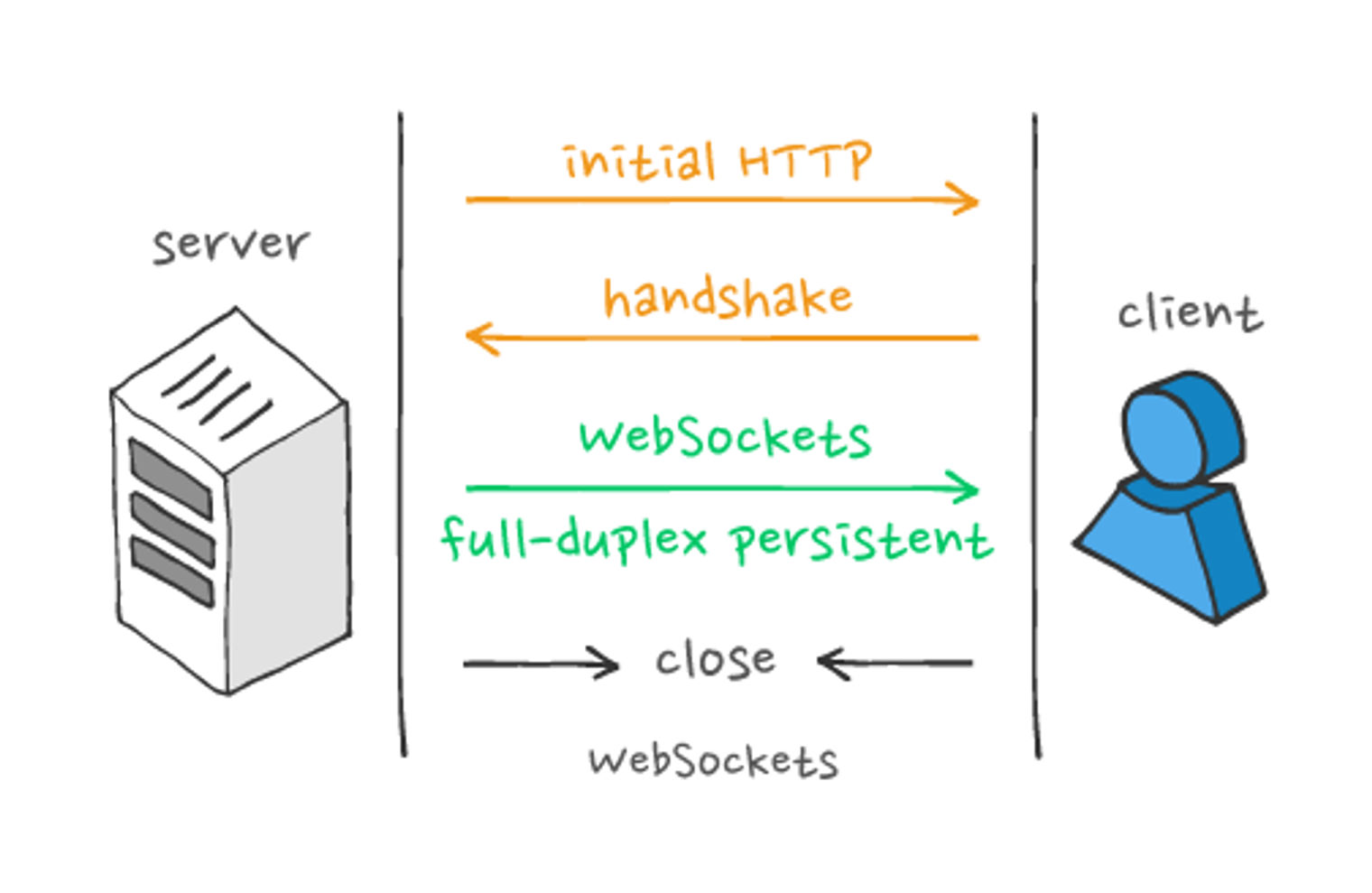

Around the middle of 2008, the pain and limitations of using Comet when implementing anything truly robust were being felt particularly keenly by developers Michael Carter and Ian Hickson. Through collaboration on IRC and W3C mailing lists, they hatched a plan to introduce a new standard for modern real-time, bi-directional communication on the web, and thus the name ‘WebSocket’ was coined.

The idea made its way into the W3C HTML draft standard and, shortly after, Michael Carter wrote an article introducing the Comet community to WebSockets. In 2010, Google Chrome 4 was the first browser to ship full support for WebSockets, with other browser vendors following suit over the course of the next few years. In 2011, RFC 6455 – The WebSocket Protocol – was published to the IETF website.

In a nutshell, WebSockets are a thin transport layer built on top of a device’s TCP/IP stack. The intent is to provide what is essentially an as-close-to-raw-as-possible TCP communication layer to web application developers while adding a few abstractions to eliminate certain friction that would otherwise exist concerning the way the web works. They also cater to the fact that the web has additional security considerations that must be taken into account to protect both consumers and service providers.
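One concrete example of such an abstraction is the opening handshake in RFC 6455: the connection starts as an ordinary HTTP request, and the server proves it understands WebSockets by hashing the client's `Sec-WebSocket-Key` header together with a fixed GUID. The sketch below assumes Node.js and its built-in `crypto` module. The function name `computeAcceptKey` is made up for illustration; the GUID and the hashing steps come from the RFC.

```javascript
const { createHash } = require("node:crypto");

// Fixed GUID defined by RFC 6455 for the opening handshake.
const WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11";

// Returns the value the server sends back in the Sec-WebSocket-Accept header:
// base64(SHA-1(clientKey + GUID)).
function computeAcceptKey(secWebSocketKey) {
  return createHash("sha1")
    .update(secWebSocketKey + WS_GUID)
    .digest("base64");
}
```

With the sample key from the RFC, `computeAcceptKey("dGhlIHNhbXBsZSBub25jZQ==")` returns `"s3pPLMBiTxaQ9kYGzzhZRbK+xOo="`. In practice a WebSocket library performs this step for you.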

Long polling issues

https://stackoverflow.com/questions/21676324/hard-downsides-of-long-polling

Overhead

Long polling creates a new connection each time, so the HTTP headers are sent again on every request, including the cookie header, which may be large.

Also, a request that just checks "is there something new?" is another connection for nothing. Each connection means work for many components, such as firewalls, load balancers, and web servers. Establishing the connection is probably the most time-consuming step once your IT infrastructure has several inspection layers.

If you are using HTTPS, you repeat the most expensive operation, the TLS handshake, again and again. TLS performance is good once the connection is established and the symmetric encryption is working, but the process of establishing the connection, key exchange and all that jazz is not fast.

Also, whenever a connection is made, log entries are written somewhere, counters are incremented somewhere, memory is consumed, objects are created, and so on. For example, the reason we have different logging configurations in production and in development is that writing log entries also affects performance.

Presence

When is a long polling user connected or disconnected? If you check at a given moment, how long can you reliably wait before checking again to be sure the user is really connected or disconnected?

This may be totally irrelevant if your application just broadcasts stuff, but it may be very relevant if your application is a game.
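There is no exact answer to that question, but one common workaround is a grace period: treat the user as online as long as a poll was seen recently enough. The sketch below is an illustration only. `PresenceTracker`, `touch`, `isOnline`, and `graceMs` are hypothetical names, and the right value for the grace period is exactly the trade-off the question above describes.

```javascript
// Hypothetical presence tracker: a user counts as online if a poll
// from them was seen within the last `graceMs` milliseconds.
class PresenceTracker {
  constructor(graceMs) {
    this.graceMs = graceMs;
    this.lastSeen = new Map(); // userId -> timestamp of the last poll
  }

  // Call this whenever a poll request from the user arrives.
  touch(userId, now = Date.now()) {
    this.lastSeen.set(userId, now);
  }

  // A short graceMs reports disconnects quickly but may flag users as
  // offline between two polls; a long graceMs does the opposite.
  isOnline(userId, now = Date.now()) {
    const seen = this.lastSeen.get(userId);
    return seen !== undefined && now - seen <= this.graceMs;
  }
}
```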

Not persistent

This is the big deal.

Since a new connection is created each time, if you have load-balanced servers in a round-robin scenario, you cannot know on which server the next connection is going to land.

When a user's server is known, like when using a WebSocket, you can push the events to that server straight away, and the server will relay them to the connection. If the user disconnects, the server can notify straight away that the user is not connected anymore, and when the user connects again, they can subscribe again.

If you do not know which server the user is connected to at the moment an event for them is generated, you have to wait for the user to connect and say "hey, user 123 is here, give me all the news since this timestamp", which makes it a little more cumbersome. Long polling is not really push technology but request-response, so if you plan for an event-driven architecture (EDA), at some point you will have some impedance mismatch to address. For example, you need an event aggregator that can give you all the events since a given timestamp (the last time that user connected to ask for news).
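A minimal sketch of such an event aggregator, assuming an in-memory store, could look like this. `EventLog`, `append`, and `since` are hypothetical names for illustration; a real deployment would need shared storage that every server behind the load balancer can reach.

```javascript
// Hypothetical in-memory event aggregator keyed by user and timestamp.
class EventLog {
  constructor() {
    this.events = []; // stored in the order they were appended
  }

  // Record an event addressed to a user.
  append(userId, payload, timestamp = Date.now()) {
    this.events.push({ userId, payload, timestamp });
  }

  // "User 123 is here, give me all the news since this timestamp":
  // returns the user's events strictly newer than `timestamp`.
  since(userId, timestamp) {
    return this.events.filter(
      (event) => event.userId === userId && event.timestamp > timestamp
    );
  }
}
```

Each poll would then pass the timestamp of the last event the client received, and the server would reply with whatever `since` returns.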

SignalR (I guess it is the .NET equivalent of socket.io), for example, has a message bus called a backplane that relays all messages to all servers, which is the key to scaling out. Therefore, when a user connects to another server, "his" pending events are there "as well"(!). It is a "not too bad" approach but, as you can guess, it affects throughput:

Limitations

Using a backplane, the maximum message throughput is lower than it is when clients talk directly to a single server node. That's because the backplane forwards every message to every node, so the backplane can become a bottleneck. Whether this limitation is a problem depends on the application. For example, here are some typical SignalR scenarios:

Server broadcast (e.g., stock ticker): Backplanes work well for this scenario, because the server controls the rate at which messages are sent.

Client-to-client (e.g., chat): In this scenario, the backplane might be a bottleneck if the number of messages scales with the number of clients; that is, if the rate of messages grows proportionally as more clients join.

High-frequency realtime (e.g., real-time games): A backplane is not recommended for this scenario.

For some projects, this may be a showstopper.
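To see why the backplane can become a bottleneck, here is a toy model of the fan-out. It is not SignalR's API: `Backplane`, `ServerNode`, and the `{ to, body }` message shape are assumptions made for this sketch. It only shows that every published message is forwarded to every node, even though a single node holds the target connection.

```javascript
// Toy model of one server behind the load balancer (hypothetical).
class ServerNode {
  constructor(name) {
    this.name = name;
    this.connections = new Map(); // userId -> callback that delivers to the client
    this.received = 0;            // messages this node had to process
  }

  connect(userId, deliver) {
    this.connections.set(userId, deliver);
  }

  receive(message) {
    this.received += 1;
    const deliver = this.connections.get(message.to);
    if (deliver) deliver(message.body); // only the node holding the user delivers
  }
}

// Toy backplane: forwards every message to every attached node.
class Backplane {
  constructor() {
    this.nodes = [];
    this.forwarded = 0; // total forwards = messages x nodes
  }

  attach(node) {
    this.nodes.push(node);
  }

  publish(message) {
    for (const node of this.nodes) {
      node.receive(message);
      this.forwarded += 1;
    }
  }
}
```

With three nodes, each message costs three forwards for one useful delivery, so the backplane's load grows with both the message rate and the number of nodes.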

Some applications just broadcast general data, but others have connection semantics, for example a multiplayer game, where it is important to send the right events to the right connections.

IMHO

Long polling is a good solution for small projects, but it becomes a big burden for highly scalable apps that need high-frequency and/or very segmented event sending.

Long polling Client Side Implementation (JS)

    async function subscribe() {
      let response = await fetch("/subscribe");

      if (response.status == 502) {
        // Status 502 is a connection timeout error,
        // may happen when the connection was pending for too long,
        // and the remote server or a proxy closed it
        // let's reconnect
        await subscribe();
      } else if (response.status != 200) {
        // An error - let's show it
        showMessage(response.statusText);
        // Reconnect in one second
        await new Promise(resolve => setTimeout(resolve, 1000));
        await subscribe();
      } else {
        // Get and show the message
        let message = await response.text();
        showMessage(message);
        // Call subscribe() again to get the next message
        await subscribe();
      }
    }

    subscribe();
